95% of GenAI projects fail. How to become part of the 5%

AI has entered its "hard-hat" era. The next 12 months will separate companies driving real P&L impact from those still stuck in pilot limbo. But here's what the statistics don't show: many AI projects aren't failing because of bad technology. They're failing because they were performative from the start, built to demonstrate innovation rather than to solve business problems.

Numbers Don't Lie

An MIT Media Lab report found that 95% of organizations investing in GenAI are getting zero return.

Meanwhile, Forrester’s Predictions 2026 report makes the “market correction” explicit: only 15% of AI decision-makers reported an EBITDA lift in the past 12 months, and fewer than one-third can tie AI value to P&L changes. As CFOs get pulled deeper into AI decisions, Forrester expects enterprises to delay 25% of planned AI spend into 2027.

What the Winners Did Differently

The 5% that succeeded didn't start with "let's use AI." They started with a business problem:

In collaboration with Databricks, Mastercard built a digital assistant that simplifies and accelerates customer onboarding and is designed to learn continuously from feedback under strong governance.

AT&T is using GenAI to modernize fraud prevention on a governed data foundation and reports major impact from its broader fraud platform efforts, including large reductions in fraud attacks.

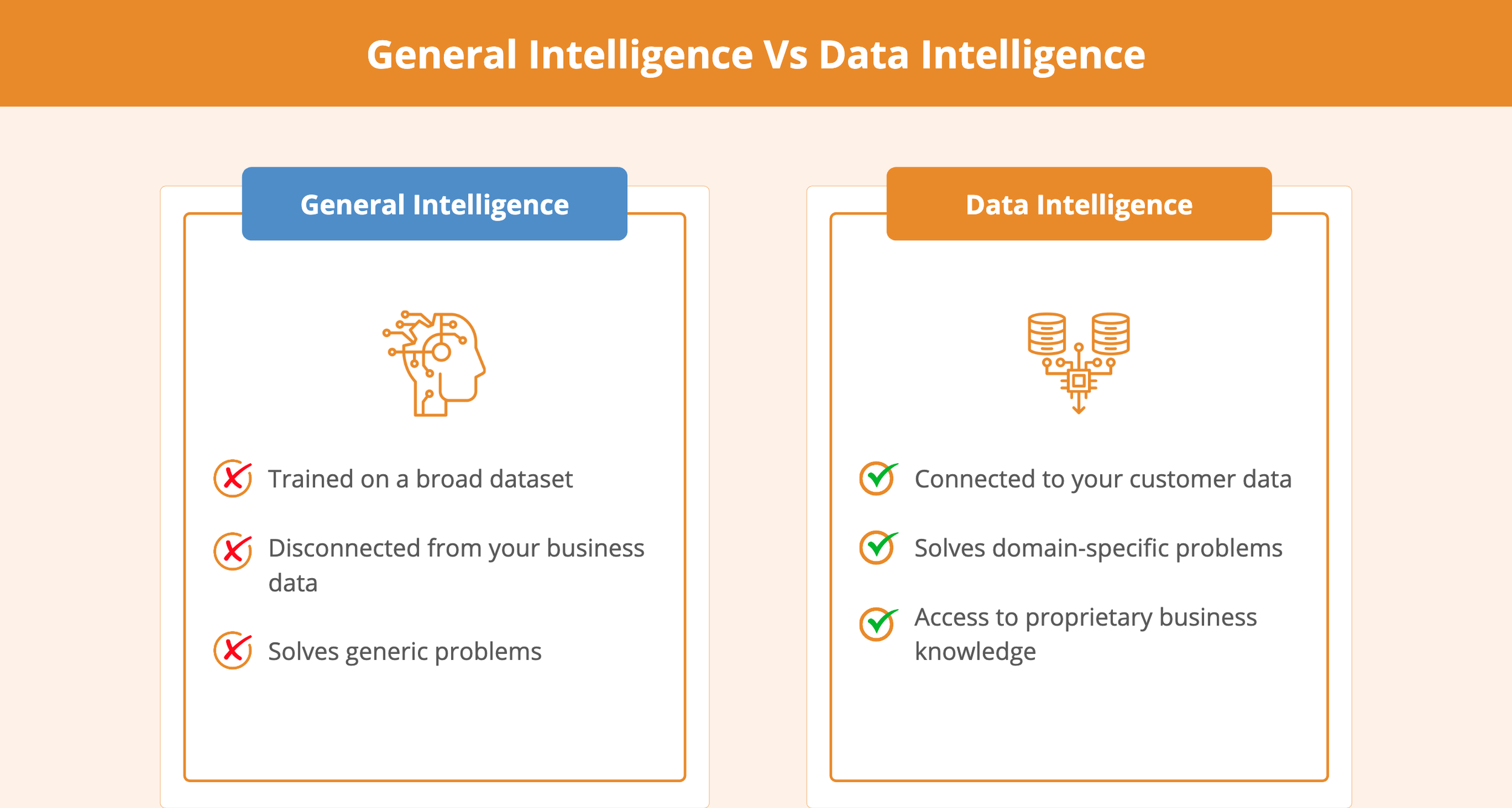

The differentiator? They bridged the gap between general intelligence (what LLMs know) and data intelligence (what your business knows). Critically, they also treated AI like any other infrastructure investment, with clear success metrics, proper governance, and business ownership.

The Performance vs. Production Gap

Here's the pattern I see in most AI projects: teams excitedly prototype a new AI assistant, impress stakeholders in a demo, then hit a wall trying to get it production-ready.

A standalone ChatGPT-like model might work in a demo, but it's disconnected from your company's data, context, and operational constraints. To solve real domain-specific problems, AI needs to tap into your customer data, documents, and APIs. In other words, it needs data intelligence.

That's when enterprises discover they've been optimizing for demos that impress, not systems that ship.

The 5 Failure Modes (and how to avoid them)

Every stalled AI project I've seen falls into at least one of these traps:

1) No CFO-proof value story

Teams start with “cool capability,” not a measurable business outcome.

Forrester’s numbers make the consequence clear: many organizations still can’t connect AI value to P&L, which triggers budget delays and tighter scrutiny.

2) The “general intelligence vs. enterprise reality” gap

A chatbot demo works… until it hits enterprise constraints: permissions, policies, data silos, compliance, and accountability.

If the agent can’t safely access the right data and tools, you get either:

generic answers (no value), or

unacceptable risk (no deployment)

The most important step is to run your agent in an environment that already holds your data (the Lakehouse), where that data has been prepared for AI use (for example, data engineers have implemented a business semantic model).
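To make the "safe access" point concrete, here is a minimal sketch of permission-aware context building: the agent only ever sees documents the requesting user is allowed to read, and it declines rather than falling back to a generic answer when nothing authorized is available. The `Document`, `User`, `authorized_context`, and `build_prompt` names are hypothetical illustrations, not part of any Databricks API, and in practice this check belongs in your data platform's governance layer rather than in agent code.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    allowed_groups: frozenset  # hypothetical: groups permitted to read this document


@dataclass(frozen=True)
class User:
    name: str
    groups: frozenset = field(default_factory=frozenset)


def authorized_context(user: User, candidates: list) -> list:
    """Keep only documents that share at least one group with the user."""
    return [d for d in candidates if d.allowed_groups & user.groups]


def build_prompt(question: str, user: User, candidates: list) -> str:
    """Build a grounded prompt from authorized documents only.

    Returns an empty string when no authorized context exists, so the caller
    can decline instead of answering from general knowledge alone.
    """
    docs = authorized_context(user, candidates)
    if not docs:
        return ""
    context = "\n".join(f"[{d.doc_id}] {d.text}" for d in docs)
    return f"Answer using only the context below.\n{context}\n\nQuestion: {question}"
```

The design choice is to filter before the model sees anything: a document that never enters the prompt cannot leak into an answer.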

3) DIY agent building creates a hidden complexity tax

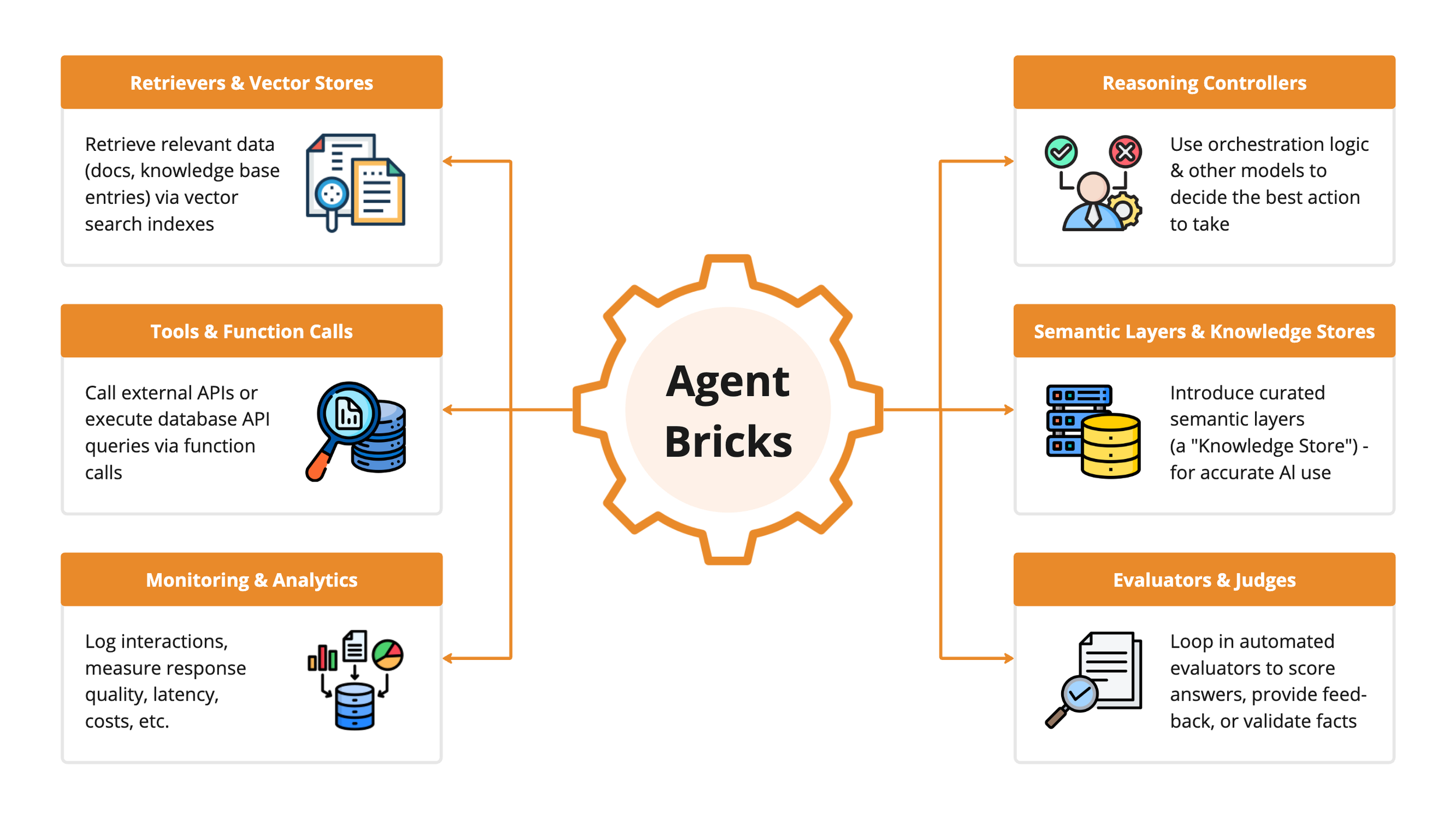

Modern “agents” aren’t just a prompt. They’re a system of data access, tool execution, governance, evaluation, monitoring, and cost controls.

Databricks calls out why projects stall: evaluation is hard, there are too many knobs, and cost/quality trade-offs surprise teams late in the process.

4) No objective quality + cost discipline

In the “hard-hat” era, “vibe checks” don’t ship.

If you can’t measure quality, you can’t scale. If you can’t control costs, you can’t expand usage.

Databricks explicitly frames quality and cost as primary barriers keeping agentic experiments from reaching production.
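Replacing "vibe checks" with a measurable gate can be as simple as running the agent over a labeled set of questions and shipping only if both a quality bar and a cost budget are met. The sketch below assumes a hypothetical agent callable that returns an answer plus its per-call cost; exact-match scoring is a deliberately simple stand-in for the rubric-based or LLM-judge evaluations real teams typically use, and the thresholds are placeholders you would agree with the business owner.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class EvalCase:
    question: str
    expected: str  # reference answer agreed with the business owner


@dataclass(frozen=True)
class AgentReply:
    answer: str
    cost_usd: float  # hypothetical: cost of this call, e.g. derived from token usage


def release_gate(
    agent: Callable[[str], AgentReply],
    cases: list,
    min_accuracy: float,
    max_cost_per_query: float,
) -> dict:
    """Score the agent on labeled cases and decide whether it may ship."""
    if not cases:
        raise ValueError("an evaluation set is required before shipping")
    correct = 0
    total_cost = 0.0
    for case in cases:
        reply = agent(case.question)
        # Case-insensitive exact match: a simple stand-in for richer judges.
        if reply.answer.strip().lower() == case.expected.strip().lower():
            correct += 1
        total_cost += reply.cost_usd
    accuracy = correct / len(cases)
    avg_cost = total_cost / len(cases)
    return {
        "accuracy": accuracy,
        "avg_cost_usd": avg_cost,
        # Both conditions must hold: quality without cost control does not scale.
        "ship": accuracy >= min_accuracy and avg_cost <= max_cost_per_query,
    }
```

Because quality and cost are checked in the same gate, a cost/quality trade-off shows up on the first evaluation run instead of surprising the team late in the process.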

5) Adoption stalls without AI literacy

Forrester also predicts that 30% of large enterprises will mandate AI training to lift adoption and reduce risk, and notes that 21% of AI decision-makers cite employee experience/readiness as a barrier.

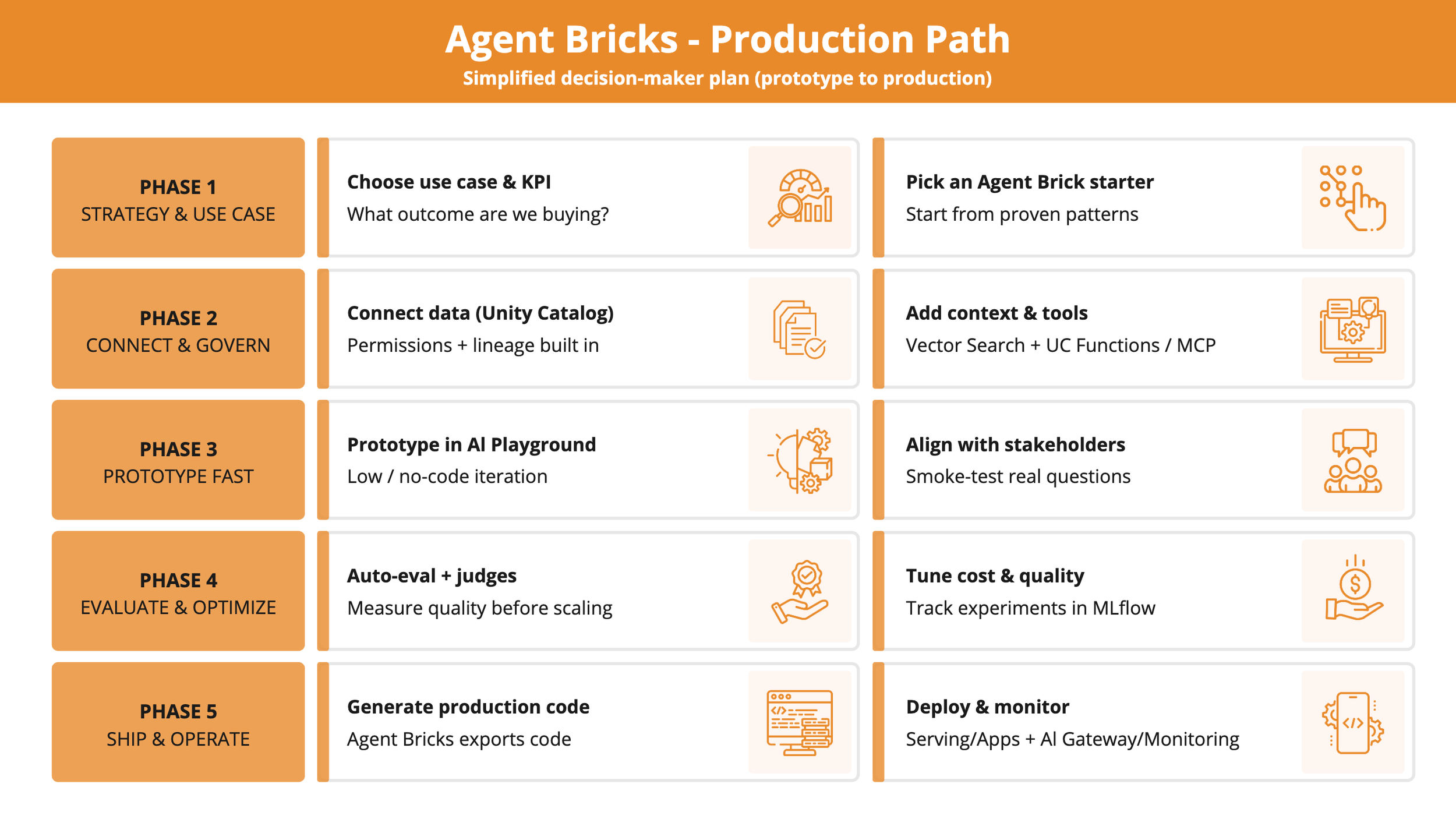

Enter Agent Bricks: a “production path,” not a magic button

This is where Databricks Agent Bricks comes in, not as the solution to your AI problems, but as a framework that forces the right discipline.

Think of Agent Bricks as infrastructure that makes it harder to skip the boring-but-critical work: a low-code factory for AI agents, built on the same platform as your Lakehouse, that generates customizable code.

Instead of stitching together a patchwork of disconnected tools, Agent Bricks provides:

Integrated data access with built-in governance

Evaluation frameworks that make quality measurement default, not optional

Cost controls that are visible from the start

Production deployment paths that don't require rebuilding

Don’t “tool” your way out of a people problem

If you want adoption (and you want to reduce risk), treat AI like a capability rollout, not a feature launch.

Forrester explicitly recommends formalized training and even suggests partnering with an AI service provider or existing technology provider to deliver it, with clear success metrics.

You don't need a massive transformation program. But you do need:

Baseline AI literacy across leaders and users

Role-based enablement (builders vs. business users vs. risk)

Clear definitions of "good," "safe," and "useful" in your context

Call to action

The 95% failure rate isn't primarily a technology problem; it's a discipline problem. Companies that succeed stop treating AI as performative innovation and start treating it as infrastructure that needs:

Clear business outcomes

Proper governance

Objective evaluation

Cost discipline

User enablement

Agent Bricks won't create a good use case for you. But it provides the framework to execute on good use cases without getting stuck in the gap between prototype and production.

The question is: are you ready to stop performing AI innovation and start shipping it?