Query Your Lakehouse In Under 1 ms.: From OLAP to OLTP

I have 1 million transactions in my Delta file, and I would like to process one transaction in milliseconds (SELECT * WHERE id = y LIMIT 1). This seemingly straightforward requirement presents a unique challenge in Lakehouse architectures.

The Lakehouse Dilemma: Built for Bulk, Not Speed

Lakehouse architectures excel at what they're designed for. With files stored in cloud storage (typically around 1 GB each), they leverage distributed computing to perform lightning-fast whole-table scans and aggregations. However, when it comes to retrieving a single row, performance can be surprisingly slow.

OLTP (Online Transaction Processing) vs OLAP (Online Analytical Processing)

The fundamental issue is architectural: Lakehouses are optimized for bulk analytical throughput, not point lookups. Getting a single-row query to execute in milliseconds—or even under one second—can be extremely challenging.

Why Millisecond Performance Matters

You might wonder, “Why is sub-millisecond performance so critical?” The answer lies in real-world applications:

Online inference: Machine learning models serving predictions in real-time

Customer-facing applications: Think of your favorite supermarket app generating personalized vouchers instantly

So, how do we do it? Create an OLTP instance.

The answer is to create an OLTP (Online Transaction Processing) database instance on top of your existing Lakehouse architecture. This approach gives you the best of both worlds: the analytical power of your Lakehouse with the speed of traditional OLTP systems like PostgreSQL.

Configuration Options

When setting up your OLTP instance, you'll encounter several key configuration options:

Capacity units: Each unit represents 16GB of RAM. Scale according to your performance needs.

Restore window: Enables automatic backups and database cloning for experimentation (think "Git for databases")

High availability (HA): Creates a mirrored instance for redundancy and can serve as an additional read replica to boost performance.

Setting Up Synced Tables in Unity Catalog

To make your Delta tables accessible through the OLTP instance, you'll need to create synced tables in Unity Catalog.

Here, we need to assign a name to the synced table that will be queried through OLTP.

Since primary keys in Delta, which uses files on cloud storage, are not guaranteed to be good practice, it is recommended to deduplicate them based on ID and timestamp (also known as SCD type 1), which is automatically handled here.

But in a scenario where you have appended only the Delta table and the ID is specified as ID BIGINT GENERATED ALWAYS AS IDENTITY, it is pretty safe to leave the option primary key is unique.

Our not-enforced, information-only Primary Key in Delta will become a real Primary Key Index in Postgres, which will be used for fast lookups.

Once configured, Unity Catalog will display additional metadata showing that your table is running on PostgreSQL, complete with primary key information.

Query Performance Results

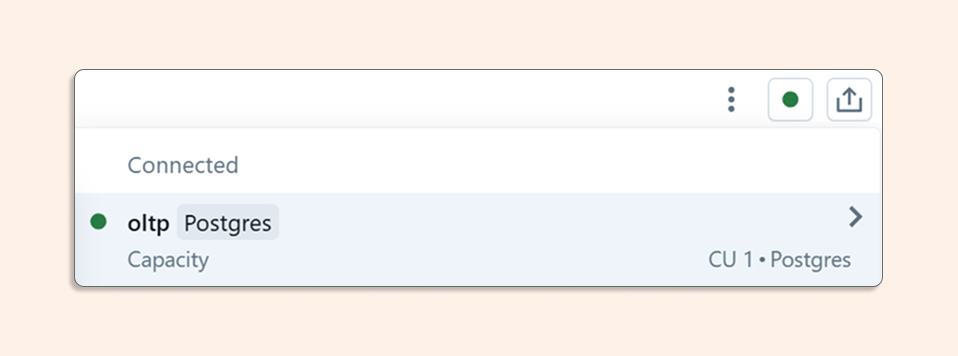

In the SQL editor, you can now connect to your OLTP database and see both your synced tables and PostgreSQL system catalogs.

Once attached to Postgres in the catalog preview on the left pane, we see our synced table, plus the Postgres catalogs.

And now we can run SELECT on our transactions table to get just one row:

Under 1 ms. Excellent!

Before you begin

Before implementing this solution, note that:

OLTP functionality is available only in selected regions

You may need to enable it in Previews within your Databricks workspace

Conclusion

By combining the analytical power of Databricks' Lakehouse architecture with the point-lookup performance of OLTP systems, you can achieve the millisecond query performance required for modern, customer-facing applications while maintaining your existing analytical workflows.

This hybrid approach represents the evolution of data architecture: no longer do you need to choose between analytical power and transactional speed—you can have both.