The Hidden Benefits of Databricks' Managed Tables

Databricks manages the entire life cycle of managed tables, resulting in no “zombies” files after DROP operations. There’s also a significant benefit in predictive optimization, while you can theoretically optimize the timing for OPTIMIZE, ANALYZE, and VACUUM operations manually, it’s extremely difficult in practice. Managed tables provide an additional advantage that impacts both performance and cost.

Since Databricks assumes that managed tables are modified only by Databricks itself, it can cache references to all Parquet files used in Delta Lake and avoid expensive list operations. This is a theory, but I decided to test it in practice.

I created two identical tables that differ only in their storage location and populated them with the same number of rows:

CREATE TABLE transactions_external -- name transactions_managed ( txn_id BIGINT, txn_ts TIMESTAMP, account_id INT, amount DECIMAL(10,2), txn_type STRING, note STRING, txn_date DATE GENERATED ALWAYS AS (CAST(txn_ts AS DATE)) ) PARTITIONED BY (txn_date) LOCATION 'abfss://unity@westus2uc.dfs.core.windows.net/transactions_ext' -- that line skipped for maned table TBLPROPERTIES ( delta.autoOptimize.autoCompact = false, delta.autoOptimize.optimizeWrite = false ); -- Insert identical data to the external table INSERT INTO transactions_external (txn_id, txn_ts, account_id, amount, txn_type, note) SELECT * FROM tx_1mln; -- I did some tests without cache SET use_cached_result = false; -- test statement SELECT COUNT(*) FROM transactions_managed; SELECT COUNT(*) FROM transactions_external;

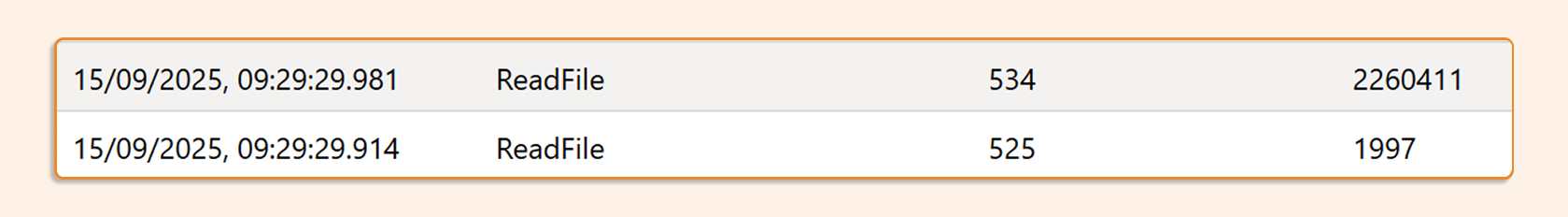

I ran count operations on both tables with caching disabled to ensure accurate measurements. Here’s the clock for the external tables:

On most query plans, we can observe that managed tables are slightly faster.

However, I decided go one level deeper and configured storage logs to be sent to Log Analytics in Azure. Note: Only enable this for debugging purposes; Log Analytics can be very expensive per GB in production environments. If you want to try this, you’ll find this option in the “Diagnostic setting” of your storage account:

I then ran a simple query to analyze recent storage operations. I ran all counts in different minutes to spot the issue.

StorageBlobLogs

| where TimeGenerated > ago(30m)

| project TimeGenerated,

OperationName,

ResponseHeaderSize,

ResponseBodySize

| order by TimeGenerated desc

Here’s the managed table:

And here is the external table:

The Key Difference

The critical difference lies in the ListFilesystemDir operations. External tables require additional directory listing operations that managed tables can avoid through caching.

While each storage operation costs only a small amount, these ListFilesystemDir operations can accumulate into significant additional costs for external tables, especially at scale.

Managed tables not only provide operational simplicity but also deliver measurable performance improvements and cost savings through optimized storage access patterns.