How to Simplify Databricks Asset Bundles with Policy Inheritance

Databricks recently introduced an enhanced policy framework that streamlines cluster configuration management. The new policy forms make it easier to create policies with default values, reducing code duplication and simplifying deployments across your organization.

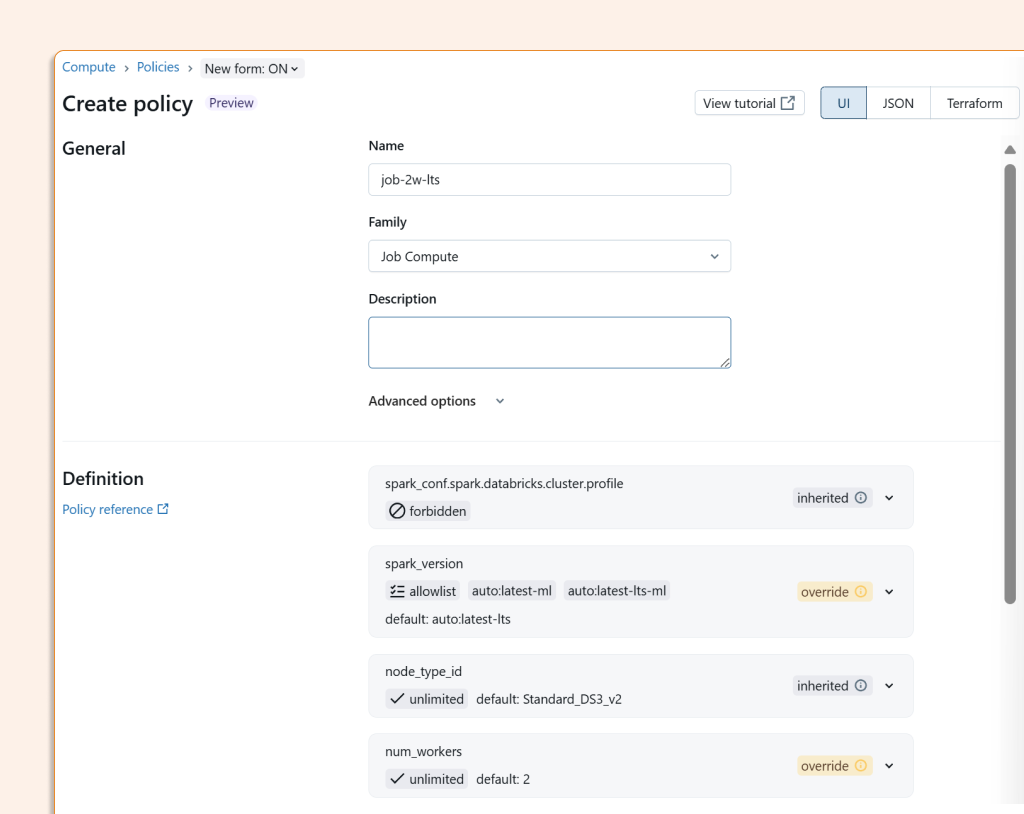

Setting up policies with default values

With the new policy forms, you can define default values directly within your policies. For example, you can create a job policy that automatically sets:

Databricks runtime to the latest LTS version

Number of workers to 2

Any other cluster configurations your team commonly uses

This removes the need to repeat these values in every job definition.

The game-changer: Deploying with default values

Once you've configured default and fixed settings in your policy, you can leverage a powerful new feature: deploying compute resources using only the policy reference.

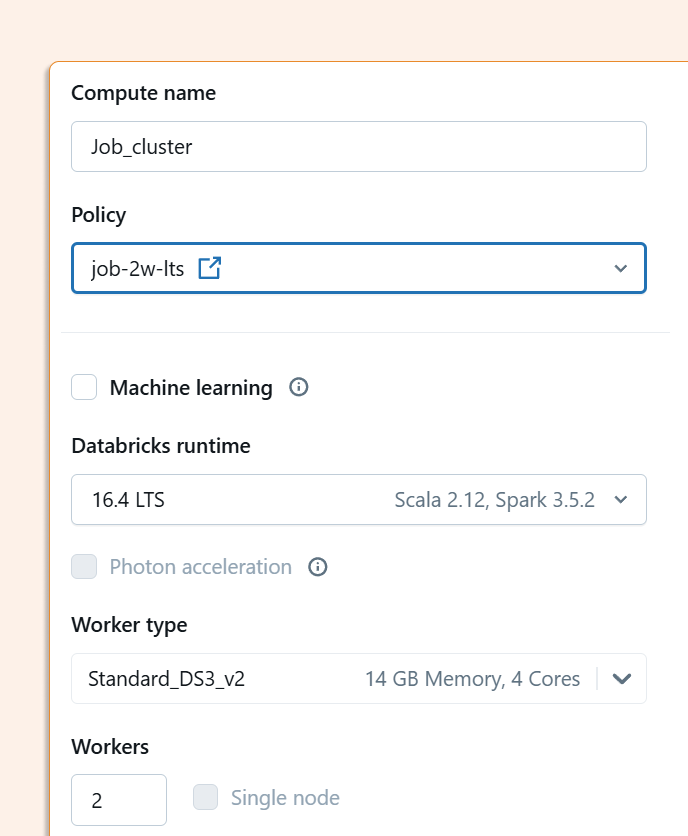

In the Databricks UI:

When you select a policy in the UI, the cluster form is populated automatically with your predefined defaults, so it simply reflects the values you've already established in the policy.

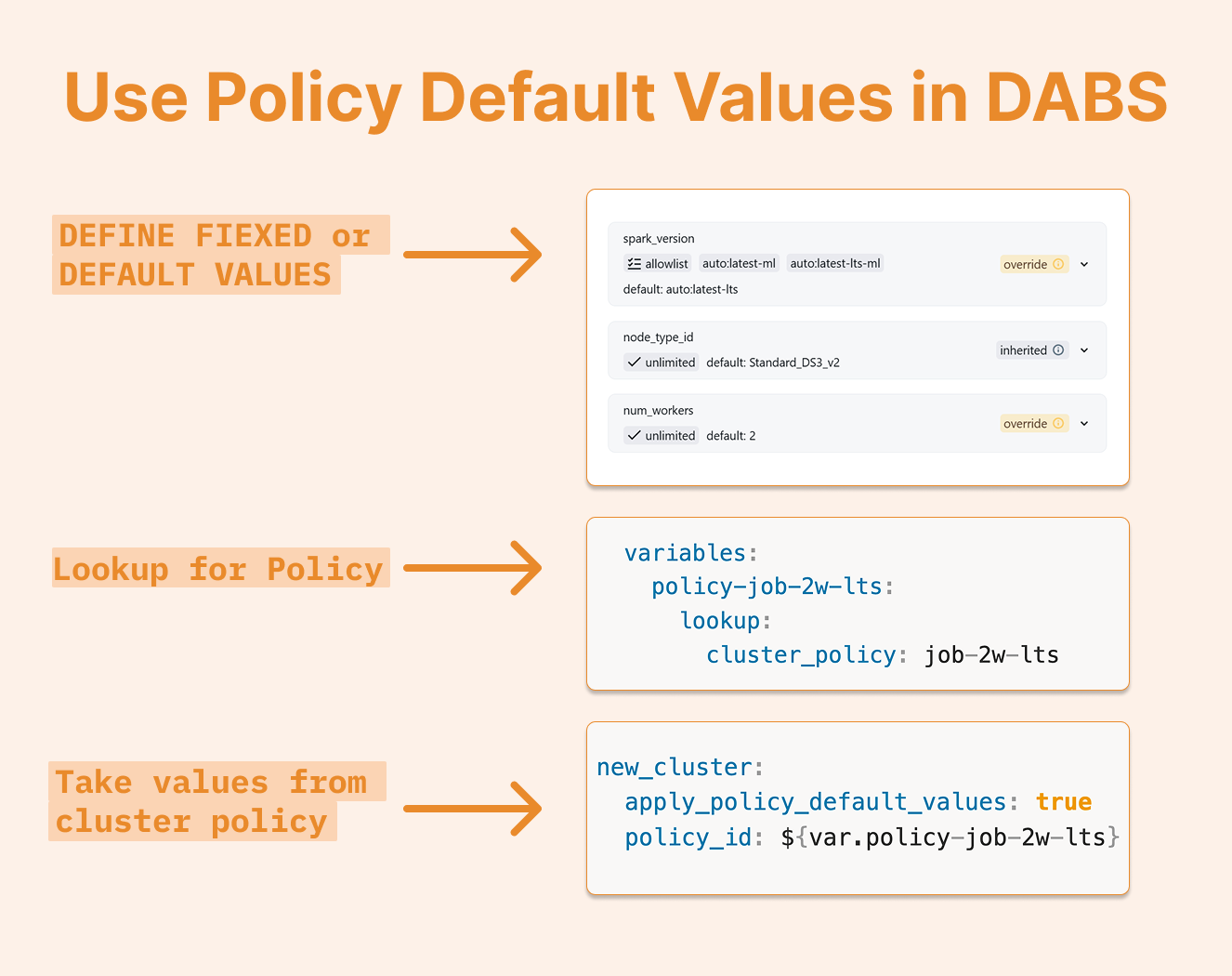

In Databricks Asset Bundles:

Here's where things get really interesting. For job clusters deployed via Asset Bundles, you can now use minimal YAML configuration. If your policy defines all required parameters (node type, number of workers, runtime) as either default or fixed values, your bundle configuration becomes quite simple:

    variables:
      policy-job-2w-lts:
        lookup:
          cluster_policy: job-2w-lts

    resources:
      jobs:
        job_with_defaults:
          job_clusters:
            - job_cluster_key: Job_cluster
              new_cluster:
                apply_policy_default_values: true
                policy_id: ${var.policy-job-2w-lts}

The apply_policy_default_values: true setting instructs Databricks to fill the cluster fields you omit with the default and fixed values from the referenced policy. The lookup variable resolves the policy by name at deployment time, so you don't hardcode policy IDs and your bundles stay portable across workspaces.
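As a sketch of how explicit settings sit alongside inherited ones: assuming the job-2w-lts policy defines the worker count as a default rather than a fixed value, a job can set that one field itself and still inherit everything else. The job name job_with_override and the value 4 are illustrative only.

```yaml
resources:
  jobs:
    job_with_override:  # hypothetical job name
      job_clusters:
        - job_cluster_key: Job_cluster
          new_cluster:
            policy_id: ${var.policy-job-2w-lts}
            # Fields omitted here (runtime, node type, ...) come from the policy.
            apply_policy_default_values: true
            # Explicit value. Assumes the policy sets num_workers as a default;
            # if the policy fixes it to 2, validation of this cluster would fail.
            num_workers: 4
```

Keeping overrides like this rare is usually the point of the pattern: the more fields a bundle sets itself, the less the policy controls.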

A new architecture pattern

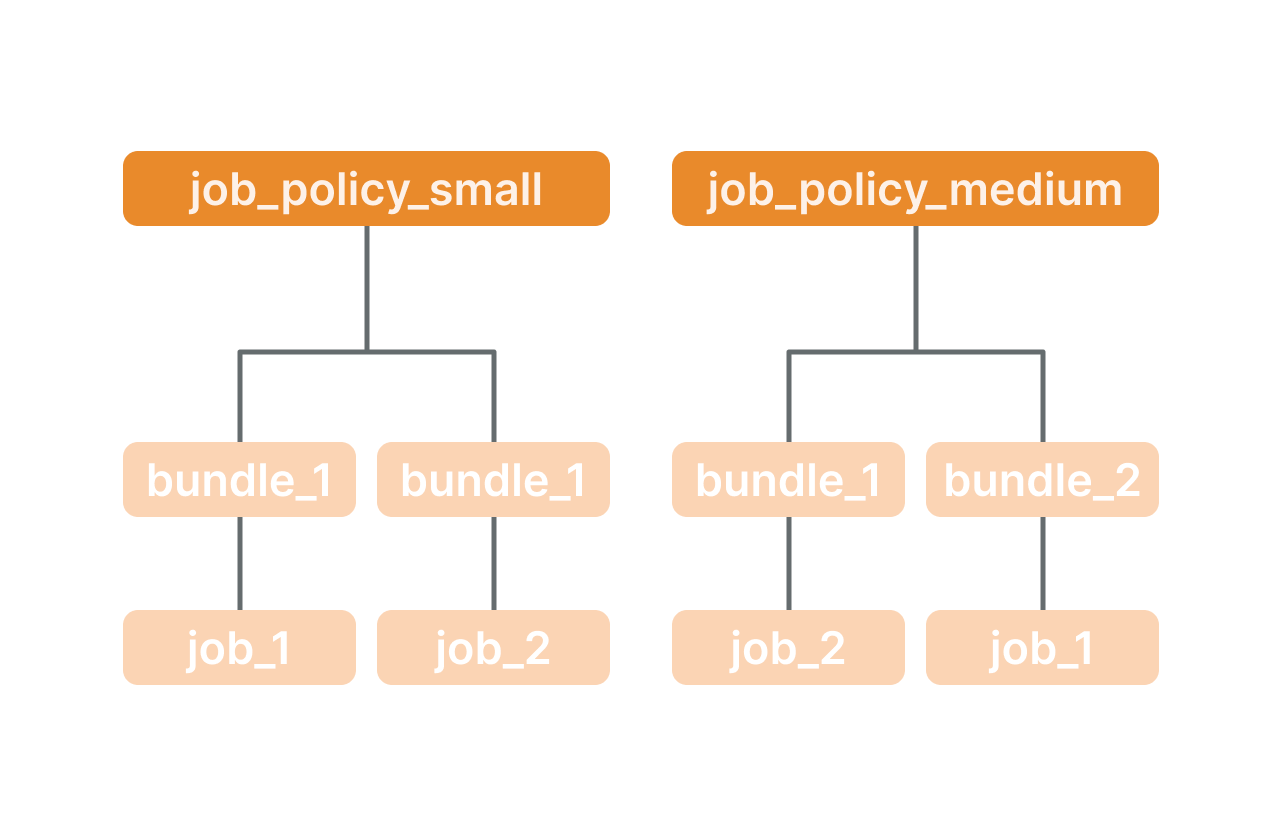

This capability opens up powerful architectural patterns for managing Databricks deployments:

Standardized tiers: Define policies like job_cluster_small, job_cluster_medium, and job_cluster_large with appropriate configurations for different workload sizes

Environment-specific policies: Create separate policies for development, staging, and production with different performance and cost characteristics

Team-specific configurations: Set up policies tailored to different teams' requirements and governance rules

Your Asset Bundles simply inherit from these policies, avoiding code repetition and centralizing configuration management. When you need to update a standard configuration, you modify the policy once rather than updating dozens of bundle files.
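One way to sketch the environment-specific pattern is to point a single lookup variable at a different policy per bundle target. The variable name job_policy, the policy names job-small-dev and job-large-prod, the job name, and the target names are all hypothetical, and this assumes your Databricks CLI version accepts a lookup inside a target-level variable override.

```yaml
variables:
  job_policy:
    description: Cluster policy that job clusters inherit from
    lookup:
      cluster_policy: job-small-dev  # hypothetical policy name

targets:
  dev:
    default: true  # uses the top-level lookup above
  prod:
    variables:
      job_policy:
        lookup:
          cluster_policy: job-large-prod  # hypothetical policy name

resources:
  jobs:
    nightly_etl:  # hypothetical job name
      job_clusters:
        - job_cluster_key: main
          new_cluster:
            apply_policy_default_values: true
            policy_id: ${var.job_policy}
```

The job definition itself never changes between environments; only the policy behind ${var.job_policy} does.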

Interactive clusters too

This approach isn't limited to job clusters. If you're deploying interactive clusters through Asset Bundles (under resources.clusters), you can use the same pattern with apply_policy_default_values to inherit policy configurations.
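A minimal sketch of the interactive-cluster variant might look like the following. The resource key shared_interactive, the cluster name, and the policy name interactive-small are hypothetical, and the sketch assumes that policy supplies every required field (runtime, node type, worker count or autoscaling) as a default or fixed value.

```yaml
variables:
  policy-interactive:
    lookup:
      cluster_policy: interactive-small  # hypothetical policy name

resources:
  clusters:
    shared_interactive:  # hypothetical resource key
      cluster_name: shared-interactive
      # Fields omitted here are filled in from the policy.
      apply_policy_default_values: true
      policy_id: ${var.policy-interactive}
```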

By using policy default values, you write less code, enforce standards centrally, and update configurations in one place instead of across several bundle files. Your team can deploy clusters faster without understanding every parameter, and governance happens through policy enforcement rather than hoping developers configure things correctly.