Stop ELT Headaches: Why We Partner with Fivetran + Databricks

Not long ago, I found myself staring at a failing ELT job late on a Friday night. The pipeline had broken—again—because a source system changed an API and my custom script couldn't keep up. After years of working with all kinds of data integration tools, from heavy-duty enterprise ELT suites to fragile DIY pipelines, I knew there had to be a better way.

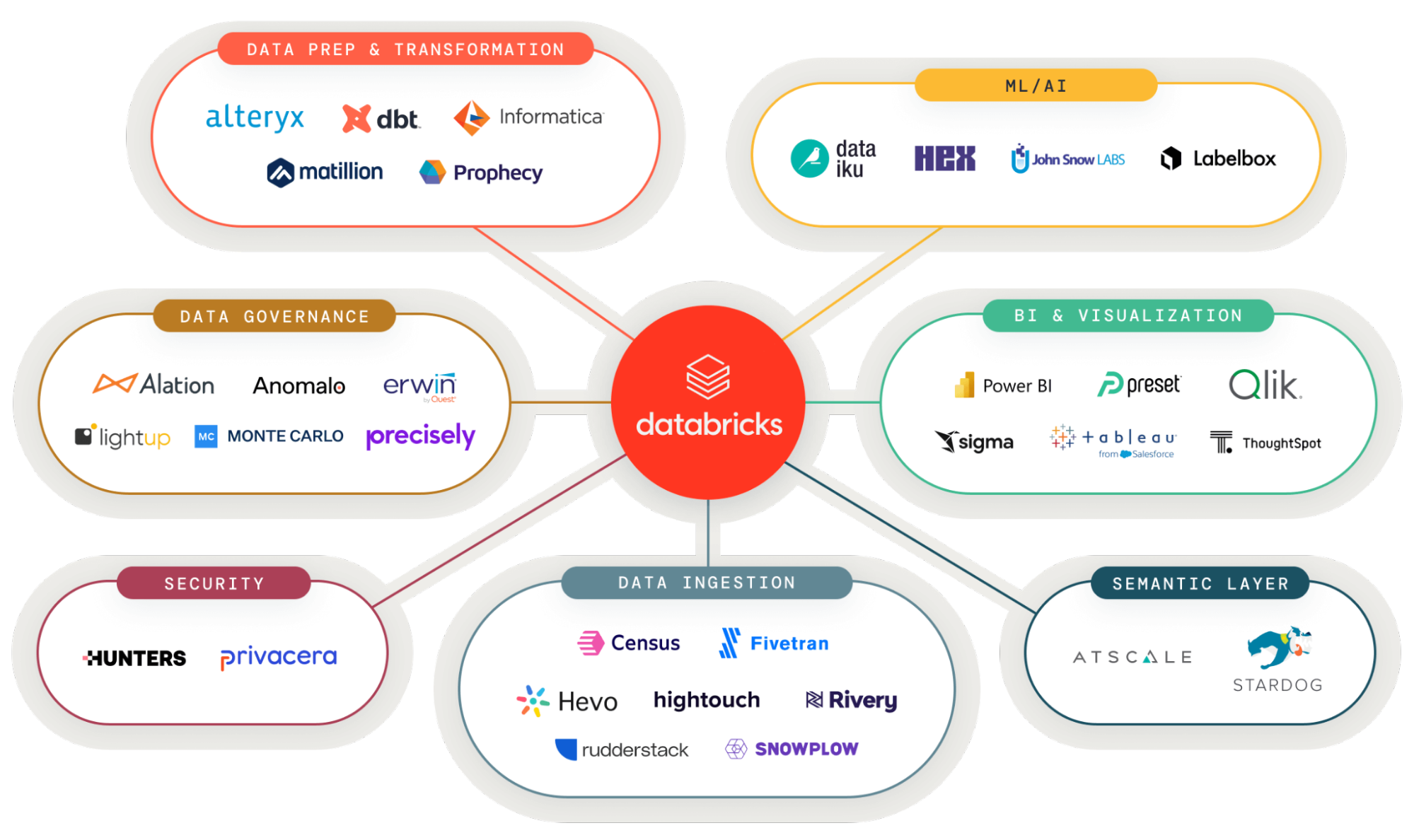

The World of Accelerators

In today's data-driven business environment, the ability to integrate, transform, and analyze data efficiently stands as a fundamental pillar of success. Achieving these objectives requires not only the right knowledge but also tools that automate and accelerate these processes.

Source: Fivetran

These tools, which reduce implementation times, automate tasks, and minimize maintenance, are known as "accelerators." The market offers a multitude of them: some focus on data observability (like Monte Carlo), others specialize in data integration (such as Fivetran), and others in data exploitation (like Sigma).

An accelerator is any tool that helps you reach your objectives faster. You could always build your own solution, but why would you? Developing custom integrations is technically feasible, but companies often end up with fragmented ecosystems that are difficult to maintain.

Chasing the Perfect Data Integration Tool

When I reflect on my early projects, I recall wrestling with traditional ELT tools like IBM DataStage and writing countless Python scripts scheduled via Airflow. Each tool taught me something: DataStage showed me power (but at the cost of complexity), and custom scripting gave flexibility (but demanded constant upkeep).

Cloud-native services like Azure Data Factory and AWS Glue promised scalability, yet still required us to manage connectors or handle schema changes manually. In every case, a pattern emerged – too much time spent fixing and tweaking pipelines, not enough time delivering insights. I remember a particular project where an API change in a SaaS CRM system caused days of troubleshooting. It was a wake-up call. I began searching for a solution that was simple enough not to require constant babysitting, yet reliable enough to trust with enterprise data.

That search led us to evaluate Fivetran alongside other options. Frankly, I was skeptical at first. Could a managed service really handle the messy work of data integration better than our hand-crafted pipelines? The answer turned out to be a resounding yes.

Fivetran: The Plumber of Big Data

I recall reading a Forbes article a few years ago that described Fivetran as the "plumber" of Big Data—a comparison I find particularly apt. Fivetran makes a lot of sense; by democratizing integrations to your data platform, you could achieve an enormous ROI.

Fivetran greatly accelerates the process of data ingestion in a reliable and secure manner. With pre-built integrations for multiple sources, including those that are typically complex, such as the SAP ecosystem, it allows users to focus on analysis and strategic decision-making rather than spending valuable time on data integration. This accelerator offers a library of roughly 690 connectors, covering everything from traditional databases and enterprise applications to file systems and event platforms.

Source: Fivetran

It's essential to understand that Fivetran is not merely a connector; it is a comprehensive solution that covers the entire integration flow.

Why Fivetran Stands Out

After working with Fivetran, it became clear why it stood out from the pack. Here are a few key reasons we fell in love with it:

Simplicity and Ease of Use

Fivetran is a fully managed, no-code platform. Setting up a new data connector is as easy as clicking through a setup wizard – no custom code or infrastructure to manage. It offers pre-built connectors for hundreds of sources, ranging from SaaS apps to databases. We just select our data sources and target (Databricks Delta Lake, in our case), and Fivetran handles the rest. This plug-and-play approach drastically cuts down implementation time.

Reliable, "Set-and-Forget" Pipelines

Unlike custom pipelines that might break on schema changes, Fivetran's automated integration adapts as schemas and APIs evolve. If a source system adds a column or changes a field, Fivetran adjusts automatically, so our data keeps flowing without manual intervention. It's designed to ensure reliable data movement at high volume, letting us focus on insights rather than constantly babysitting ELT jobs. In other words, it just works – day in, day out, which gives our team peace of mind.

Robust ELT and Schema Handling

Fivetran follows an ELT philosophy (Extract-Load-Transform). It pulls data from sources and lands it in our Databricks environment as raw tables, ready for us to transform using the full power of Databricks. This separation means Fivetran's job is laser-focused on extraction and loading, and it does that job exceedingly well. It even handles schema changes gracefully by automatically evolving the target schema (adding new columns, for example) so that our analysts always see ready-to-query schemas in Databricks. No more pipeline crashes at 2 AM because a source system had a minor change.

Security and Governance Built In

As a managed service, Fivetran takes security seriously (data is encrypted in transit and at rest), but what really impressed us was its integration with Databricks governance features. Fivetran can natively integrate with Unity Catalog, Databricks' data governance layer, by automatically registering tables and schemas on every sync. This means the data it lands is immediately visible and governed under our central catalog – no extra steps required. For a consultancy like SunnyData, which often juggles multiple client workspaces, this feature is gold.

Delta Lake Compatibility

Fivetran doesn't just dump data into some generic format – it loads data into Delta Lake format on cloud storage, which is Databricks' native format for the Lakehouse. In fact, Fivetran has the ability to take data from its 650+ source connectors and land it directly to an Azure Data Lake or S3 in Delta format securely and reliably. For us, this means the ingested data immediately benefits from Delta Lake's ACID transactions, schema enforcement, and performance optimizations. It's essentially ingestion tailor-made for Databricks.

Fivetran + Databricks: A Perfect Match for the Modern Data Stack

One thing I quickly realized is how beautifully Fivetran complements Databricks. It's almost as if they were made for each other in a modern data architecture. At SunnyData, we specialize in building lakehouse architectures on Databricks – that means using open formats like Delta Lake, decoupling storage and compute, and enabling both BI and AI on the same platform. Fivetran fits into this picture as the data ingestion workhorse that makes sure all our source data arrives in the lakehouse reliably and up-to-date.

The integration between Fivetran and Databricks is very smooth. Databricks has even made it easy by including Fivetran in its Partner Connect integrations, so hooking up Fivetran to a Databricks workspace is straightforward. In practice, when we connect Fivetran to Databricks, we configure a Delta Lake destination. Under the hood, Fivetran will store data in our cloud object storage (S3, ADLS, etc.) in Delta format and create the corresponding tables in Databricks. With Unity Catalog in play, we specify which catalog and schema Fivetran should use, and it will create or use those, registering every table automatically.

A huge benefit of this automated integration is accelerated development cycles. In the past, a lot of project time was spent just ingesting data and making sure it's in a queryable state. Now, Fivetran takes care of that heavy lifting for us. Diverse source data (from CRM systems, operational databases, marketing analytics, you name it) is centralized into our Databricks Lakehouse with ease. Because Fivetran adapts to source changes on the fly, we don't get nasty surprises – our Databricks tables stay in sync, and our analysts can trust that the data is current. This frees us up to concentrate on downstream transformations, modeling, and analysis. Essentially, Fivetran feeds the lakehouse, and Databricks consumes that data to deliver insights.

When to Use Fivetran

Deciding when to use Fivetran is largely subjective, as its utility spans a wide array of scenarios. However, it is particularly valuable in environments where you must manage multiple integration sources. Moreover, when scalability, security, and compliance requirements are stringent, Fivetrann provides a comprehensive solution that meets these demands with robust and efficient integrations.

Source: Fivetran

Additionally, Fivetran is ideal for maintaining platform-agnostic data ingestion, which eases transitions between platforms—for instance, migrating from Snowflake to Databricks—without losing centralized transformation logic.

Real-World Validation: Tested and Trusted in Enterprise Projects

At SunnyData, we don't just take a vendor's word for it – we rigorously test tools in real-world projects before recommending them. Fivetran has passed that test with flying colors. Over the past year or so, we've deployed Fivetran + Databricks in multiple enterprise scenarios, and each time we've seen the benefits reaffirmed.

One example that stands out was a data platform modernization for a global retailer. They had a patchwork of legacy ELT jobs loading an on-prem data warehouse, and they wanted to move to Databricks on the cloud. We leveraged Fivetran to quickly pipe data from their legacy sources (including Oracle and SAP databases, plus several REST APIs) into Databricks. In the initial phase, we ran Fivetran in parallel with their old pipelines to ensure everything matched. The result was that within a few weeks, we had an entire replica of their data warehouse in Delta Lake, continuously updated via Fivetran, all while the old system was still running. It gave the business confidence to switch over, because they literally saw the data flowing reliably in both systems. And for us, using Fivetran meant we avoided writing dozens of individual ingestion scripts – we just configured connectors. The time savings and reduction in risk were enormous.

Another scenario was a cloud-to-cloud migration. One of our clients was moving from Snowflake to Databricks. They were already using Fivetran with Snowflake as the destination. Our guidance to them was simple: keep using Fivetran, just point it at Delta. In our migration playbook, we noted that for customers already using Fivetran, it's a no-brainer to continue – Fivetran is a fantastic tool that works excellently within the Databricks ecosystem. We helped them set up Databricks as a new destination in Fivetran, recreated the connections, and in short order, all their data was syncing into Databricks. The switch was painless.

Fivetran even allowed a phased approach: for a while, data landed in both Snowflake and Databricks, until Databricks took over full production duties. This dual-write capability gave everyone confidence because there was zero downtime and no data loss during cutover. We've described this approach in our migration guides – essentially re-pointing the Fivetran pipelines to Databricks and letting it handle the rest. It's hard to imagine doing that so smoothly with a homegrown solution.

Confidence in the Databricks + Fivetran Ecosystem

Looking back at our journey, I can say partnering with Fivetran has been one of the best decisions for SunnyData and our customers. It wasn't just about adding another tool to our toolbox – it was about embracing a modern approach to data integration that aligns perfectly with the modern data platform architectures we build on Databricks. The Databricks + Fivetran ecosystem means we can deliver a full-stack solution: an agile lakehouse platform powered by Databricks, fed continuously by Fivetran’s reliable pipelines.

What gives me confidence is knowing that this combination has been battle-tested. It's not theoretical or hype; we've validated it across real enterprises and seen how it accelerates time to insight, reduces maintenance overhead, and scales with growing data needs. Databricks provides the powerful analytics engine and governance, while Fivetran provides the connective tissue, seamlessly bringing in data from wherever it lives. Together, they let data teams focus on what truly matters – using data –instead of worrying about how to move and fix data.

As a data engineer at heart, I'm frankly relieved to have a tool like Fivetran in our arsenal. It means fewer late-night emergencies and more proud project deployments. Our team at SunnyData is excited about the future, knowing that we have this solid foundation. We are proud to recommend and support both Databricks and Fivetran as cornerstones of a modern, high-impact data stack.

In the end, the story is simple: we chose to partner with Fivetran because it lets us deliver simpler, faster, and more reliable data solutions. And after seeing the results first-hand, I can say with confidence, the Databricks + Fivetran duo is a game-changer for anyone looking to unlock the full potential of their data.

Here's to many more successful projects built on this ecosystem, and to never spending another Friday night fighting broken pipelines! 😅